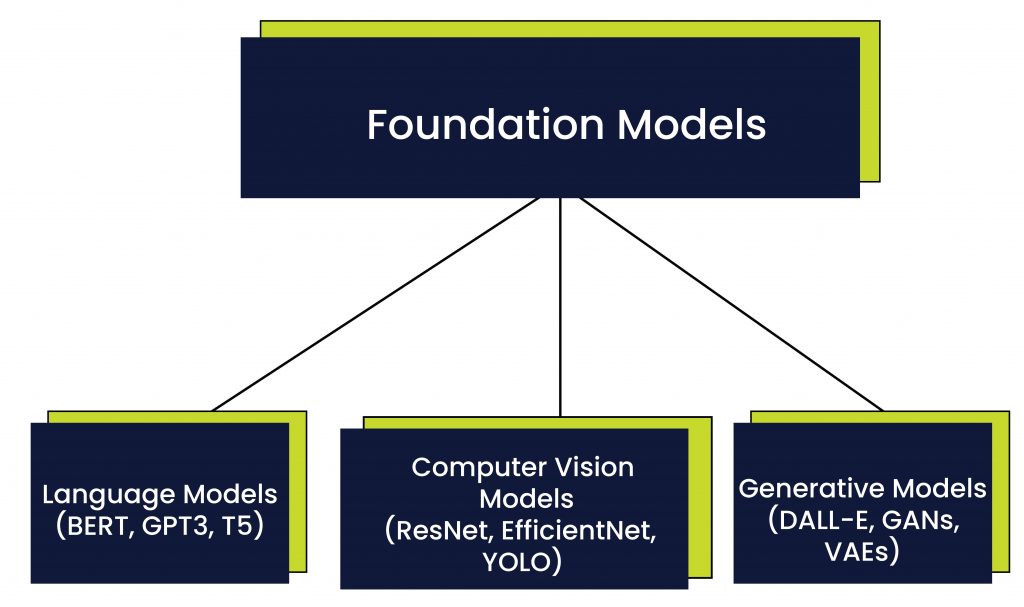

Emergence of Foundation Models: AI’s Leap Towards General Intelligence

The field of artificial intelligence (AI) has witnessed a paradigm shift with the advent of foundation models. These massive neural networks, trained on vast datasets, have demonstrated remarkable capabilities across many tasks, which some see as a step toward more general intelligence in AI.

What are Foundation Models?

Foundation models are deep learning architectures with billions or even trillions of parameters, trained on broad data so that they can be adapted to many downstream tasks. Large language models (LLMs) are the best-known example. They are typically trained on text or code datasets and exhibit a wide range of language-related abilities, including:

* Natural language understanding
* Text generation
* Translation
* Summarization
* Question answering

Significance of Foundation Models

The emergence of foundation models has profound implications for AI:

* Extensibility: Foundation models can be fine-tuned or extended to perform specialized tasks, reducing the need for domain-specific training from scratch.
* Generalization: Their broad training gives them wide-ranging knowledge, enabling them to transfer what they have learned across different domains.
* Efficiency: Once trained, foundation models can perform a multitude of tasks without requiring extensive retraining.
* Scalability: Their scale allows them to handle complex problems, often with high accuracy.

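
The extensibility point can be made concrete with a toy sketch. It assumes one common adaptation pattern, keeping a pretrained base frozen and training only a small task-specific head on top. Everything here is hypothetical: `frozen_base` is a hand-written stand-in rather than a real pretrained model, and the word lists, training examples, and function names are invented for illustration.

```python
import math

# Hypothetical stand-in for a pretrained base model: it maps text to a
# fixed feature vector and is never updated ("frozen") during adaptation.
POSITIVE_WORDS = {"good", "great", "excellent", "love"}
NEGATIVE_WORDS = {"bad", "poor", "terrible", "hate"}


def frozen_base(text):
    """Return [positive word count, negative word count] for the text."""
    words = text.lower().split()
    pos = sum(w in POSITIVE_WORDS for w in words)
    neg = sum(w in NEGATIVE_WORDS for w in words)
    return [float(pos), float(neg)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs=200, lr=0.5):
    """Fit a small logistic-regression head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            feats = frozen_base(text)  # the base itself is not trained
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            err = pred - label
            weights = [w - lr * err * f for w, f in zip(weights, feats)]
            bias -= lr * err
    return weights, bias


def predict(head, text):
    """Probability that the text belongs to the positive class."""
    weights, bias = head
    feats = frozen_base(text)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)


# Tiny made-up sentiment task used to adapt the head.
train = [
    ("great product love it", 1),
    ("terrible and bad", 0),
    ("excellent good value", 1),
    ("poor quality hate it", 0),
]
head = train_head(train)
```

After training, `predict(head, "good")` returns a probability above 0.5 and `predict(head, "bad")` one below 0.5. Only the three parameters of the head were learned, which is the sense in which adapting a base model can be much cheaper than training a specialized model from scratch.
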

Applications of Foundation Models

Foundation models have opened up a wide range of applications, including:

* Natural language processing (NLP): Chatbots, language translation, text summarization
* Computer vision: Object recognition, image captioning, medical imaging
* Healthcare: Disease diagnosis, personalized treatment recommendations
* Finance: Risk assessment, fraud detection, investment analysis
* Education: Personalized learning, language instruction, question answering

Challenges and Future Directions

While foundation models hold immense potential, they also present challenges:

* Bias: They can inherit biases from the training data, leading to unfair or inaccurate results.
* Interpretability: Their complex architectures make it difficult to understand their reasoning process.
* Computational cost: Training and deploying foundation models requires significant resources.

Ongoing research focuses on addressing these challenges while exploring new applications and capabilities. Future developments include:

* More comprehensive models: Models trained on diverse datasets to broaden their understanding.
* Interpretable AI: Techniques to make foundation models more transparent and explainable.
* Edge AI: Deploying foundation models on resource-constrained devices for real-time applications.

Conclusion

The emergence of foundation models marks a transformative leap forward in AI. Their powerful capabilities and broad applicability hold the promise of revolutionizing various industries and improving our lives in countless ways. As research continues to refine and expand their capabilities, we can anticipate even greater advancements in the pursuit of general intelligence.
