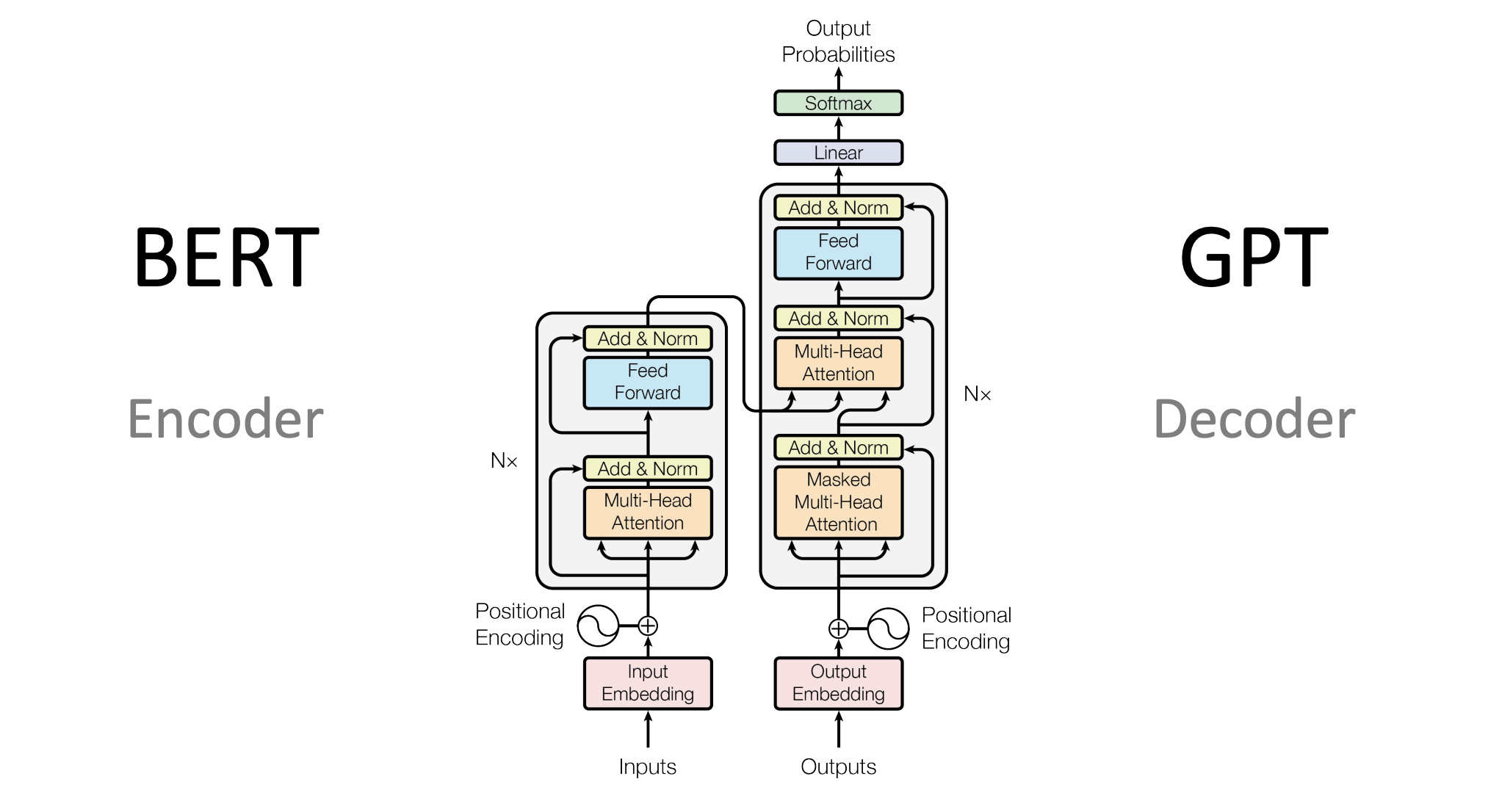

# AI Unveils New Horizons in Language Processing with Transformer Architectures

The advent of transformer architectures has revolutionized the field of natural language processing (NLP). These models use attention mechanisms to model relationships between the elements of a sequence, and between input and output sequences. This enables unprecedented capabilities in language tasks.

## What are Transformers?

Transformers are deep neural networks built around a mechanism called self-attention. In self-attention, every position in a sequence computes a weighted combination of all positions in that sequence. Traditional recurrent neural networks (RNNs) process a sequence one token at a time. Transformers instead process the entire sequence in parallel. Attention links any two positions directly, rather than passing information step by step through a hidden state. As a result, transformers capture long-range dependencies and contextual information more effectively.

## Key Advantages of Transformer Architectures

* Improved Sequence Modeling: Transformers excel at capturing the intricate relationships between elements within a sequence, which makes them well suited to tasks like language translation and text summarization.
* Parallel Processing: Transformers process all positions of a sequence in parallel rather than one after another. They can therefore be trained on large amounts of data much faster than RNNs on parallel hardware such as GPUs.
* Scalability: Transformer architectures have been scaled to very large models and training corpora. However, the cost of self-attention grows quadratically with sequence length.
* Versatility: Transformers are not limited to NLP tasks; they have also shown promising results in computer vision, speech recognition, and protein modeling.

## Applications in NLP

Transformer architectures have found widespread application across NLP tasks, including:

* Machine Translation: Transformers have become the de facto standard for machine translation, achieving state-of-the-art results in multiple language pairs.
* Text Summarization: Transformers can generate concise summaries of lengthy documents that still cover their main points.
* Question Answering: Transformers can answer questions by extracting relevant information from a given document.
* Natural Language Understanding: Transformers facilitate the interpretation of complex language structures and relationships, enabling improved dialogue systems and chatbots.

## Conclusion

Transformer architectures have unlocked new possibilities in language processing. Their parallel processing capabilities, improved sequence modeling, and versatility make them essential tools for handling complex NLP tasks. As research continues, transformer-based models are expected to further advance the capabilities of natural language technologies and play a transformative role in diverse applications.
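To make the self-attention idea behind transformers more concrete, here is a minimal sketch of single-head scaled dot-product self-attention, the attention form used in the original Transformer design. It is written in plain Python so that it stays self-contained. The helper names (`matmul`, `attention_weights`, `self_attention`) are hypothetical and are not any library's API. The projection matrices `w_q`, `w_k`, and `w_v` would be learned in a real model; here they are assumed to be identity matrices for illustration. Multi-head attention, masking, and positional encodings are deliberately omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row maximum before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q: Matrix, k: Matrix) -> Matrix:
    """Return the n x n matrix of attention weights; each row sums to 1."""
    d_k = len(q[0])
    # Every position scores every position in one matrix product,
    # instead of token by token as an RNN would.
    scores = matmul(q, transpose(k))
    return [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over x (n tokens x d_model)."""
    q = matmul(x, w_q)  # queries
    k = matmul(x, w_k)  # keys
    v = matmul(x, w_v)  # values
    # Each output row is a weighted average of the value rows.
    return matmul(attention_weights(q, k), v)


# Toy usage: three 2-dimensional token vectors and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(attention_weights(matmul(x, identity), matmul(x, identity)))
print(self_attention(x, identity, identity, identity))
```

With identity projections, the third token `[1, 1]` has the largest dot product with itself, so the third row of the weight matrix puts the most weight on its own position. The n-by-n score matrix also explains why every token can attend directly to every other token. It is likewise the reason the cost of self-attention grows quadratically with sequence length. Production implementations compute the same quantities with batched tensor operations rather than Python lists.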
