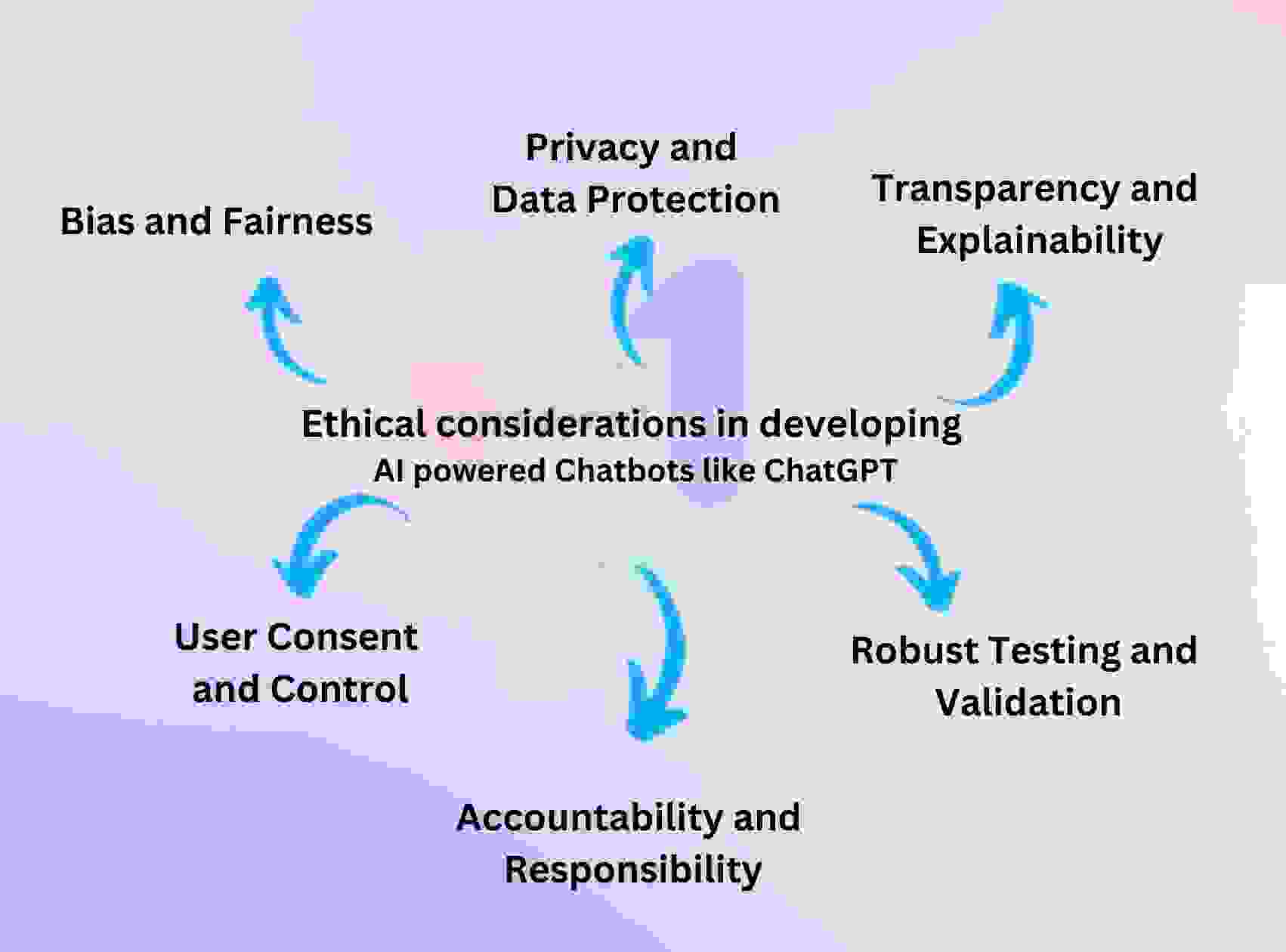

AI-Powered ChatGPT Ignites Ethical Debate on Language Model Responsibility

The advent of ChatGPT, a powerful AI-driven language model, has sparked an intense ethical debate about the responsibility and accountability of those who create and use such tools.

Concerns about Misinformation and Bias: ChatGPT’s ability to generate seemingly credible text has raised concerns about its potential for spreading misinformation. The model can produce convincing content on any topic, even when that content lacks a factual basis. This risks perpetuating false narratives and undermining trust in information. Furthermore, ChatGPT’s training data includes vast amounts of text from the internet, which can carry existing biases. Users must be mindful that the model’s outputs may reflect or reinforce societal prejudices.

Accountability for Harmful Content: An open ethical question is who is ultimately responsible for harmful content generated by language models like ChatGPT. Developers argue that the model is merely a tool and that users are responsible for the content they create with it. Critics contend that creators have an obligation to ensure that their products are not used for malicious purposes.

Impact on Education and Employment: ChatGPT’s proficiency in generating human-like text raises concerns about the future of education and employment. Students may use it to complete assignments or write essays, while professionals could hand their writing tasks over to AI-generated content. This raises questions about the authenticity and originality of work and about unfair advantage in competitive situations.

Addressing Ethical Concerns: To address these concerns, stakeholders have proposed various measures, including:

* Transparency and Education: Developers should provide clear guidance on the model’s capabilities and limitations to support responsible use.
* Ethical Guidelines: Establish ethical guidelines for the use of language models to prevent their misuse for harmful purposes.
* Fact-Checking Mechanisms: Integrate fact-checking tools into ChatGPT, or develop complementary systems, to mitigate the spread of misinformation.
* User Education: Educate users about the potential risks and benefits of language models so they can make informed decisions.

The ethical debate surrounding ChatGPT is ongoing, with no easy answers. As language models become increasingly sophisticated, it is crucial to strike a balance between innovation and responsible use so that these powerful tools contribute positively to society.
